INTRODUCTION TO CAMERA TRACKING IN VFX

Camera tracking is a method of recovering a camera’s position and movement in a physical environment so that 3D objects can be synced with that movement.

Because it provides an accurate solution for the camera’s movement, camera tracking is an essential step and one of the first tasks that must be completed in the VFX chain. It affects practically every other aspect of video production.

Filmmakers use tracking shots to draw viewers into the story by letting them travel through a scene in real time alongside the characters on screen.

3D Camera Tracking

By tracking the camera motion in 2D sequences or still images with the Camera Tracker node, you can create an animated 3D camera, a point cloud, and a scene that is linked to the solution. You can track features automatically, add User Tracks or tracks from a Tracker node, edit your tracks manually, and use a Bezier or B-spline shape to mask off moving objects. Camera Tracker can solve stereo sequences as well as several other types of camera.

This method involves following a camera’s movement throughout a shot in order to replicate it exactly with a virtual camera in a 3D animation program. Doing so makes it possible to match the viewpoint of the newly animated components when they are composited back into the original live-action frame. The most popular way to track the motion of a live-action camera is to use a structure-from-motion algorithm, which determines the movement of the camera by combining feature tracks from numerous frames. The procedure entails a rigorous evaluation and analysis of the footage, from the camera lens, angle, and movement to the level of distortion and the solid track points, or “points for confidence,” as they are known from a technical perspective.
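
As a rough illustration of what a structure-from-motion solve checks, the sketch below projects known 3D points through a simplified pinhole camera and measures how far they land from the tracked 2D features (the reprojection error). It assumes a camera with no rotation and no lens distortion, and every name and number in it (`project`, `rms_reprojection_error`, the focal length in pixels, the sample points) is hypothetical rather than taken from any particular tracking package.

```python
import math

def project(point, cam_pos, focal_px, center):
    """Project a 3D point through a pinhole camera looking down +Z (no rotation)."""
    x = point[0] - cam_pos[0]
    y = point[1] - cam_pos[1]
    z = point[2] - cam_pos[2]
    if z <= 0:
        raise ValueError("point is behind the camera")
    return (center[0] + focal_px * x / z, center[1] + focal_px * y / z)

def rms_reprojection_error(points3d, tracks2d, cam_pos, focal_px, center):
    """Root-mean-square pixel distance between projected points and 2D tracks."""
    total = 0.0
    for point, (u, v) in zip(points3d, tracks2d):
        pu, pv = project(point, cam_pos, focal_px, center)
        total += (pu - u) ** 2 + (pv - v) ** 2
    return math.sqrt(total / len(points3d))

# Hypothetical scene: three 3D points and a camera shifted 0.5 units to the right.
points = [(0.0, 0.0, 10.0), (1.0, 0.5, 8.0), (-2.0, 1.0, 12.0)]
focal = 1000.0               # assumed focal length in pixels
center = (960.0, 540.0)      # assumed image center for a 1920x1080 frame
true_cam = (0.5, 0.0, 0.0)

# Simulated 2D feature tracks, as seen from the true camera position.
tracks = [project(p, true_cam, focal, center) for p in points]

good = rms_reprojection_error(points, tracks, true_cam, focal, center)
bad = rms_reprojection_error(points, tracks, (0.0, 0.0, 0.0), focal, center)
print("error with correct camera:", good)
print("error with wrong camera:  ", bad)
```

A real solver typically searches over camera position, rotation, and lens parameters for the values that drive this error toward zero across all frames; the sketch only evaluates the error for two candidate positions.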

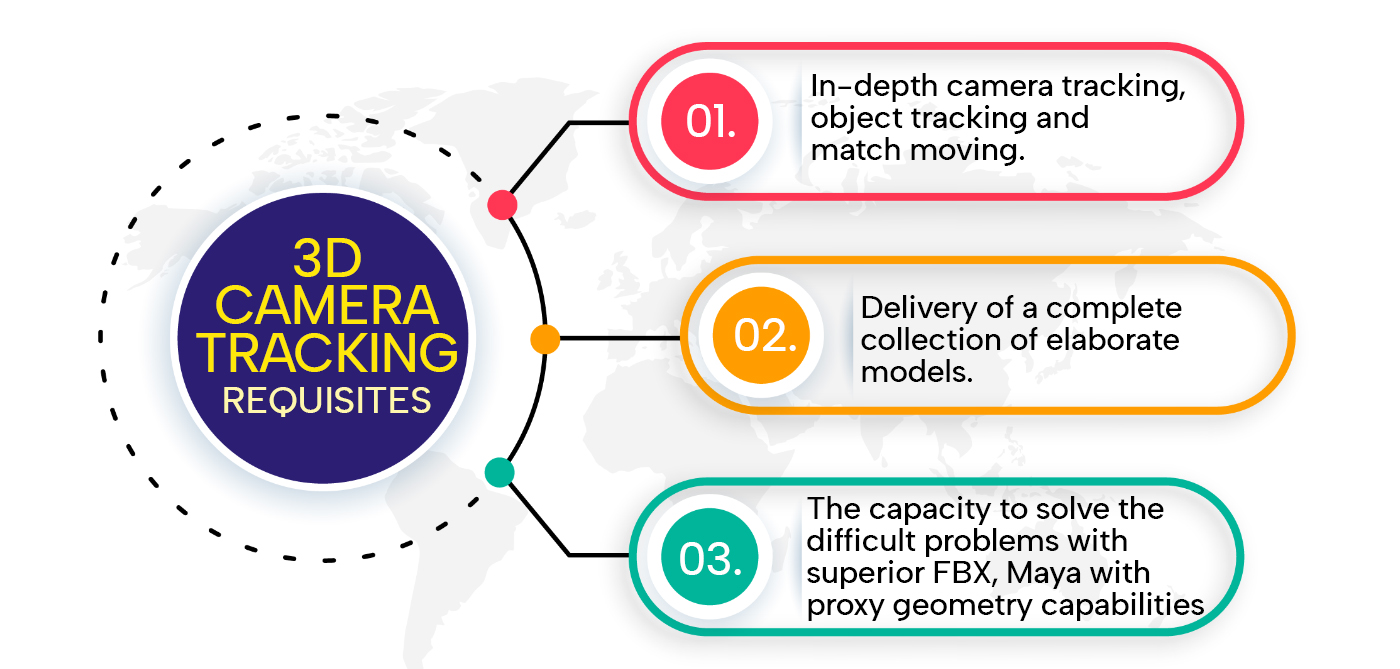

Reliable camera tracking is the foundation of the VFX work on a scene. A weak 3D camera track will produce a mediocre result no matter how captivating the visual effects are. Because the rest of the VFX chain is built on it, a precise camera track is crucial for producing high-quality results when blending computer-generated (CG) graphics into live-action footage. Novices tackling the most challenging shots benefit from expert knowledge and the latest techniques and technologies.

Camera Tracking in After Effects

With tracking, we can instruct After Effects to follow the position, scale, and rotation of a specific region of the video. We can then use the tracked data to attach and integrate any additional element into the scene. There are two different ways to track: point tracking and planar tracking. Point tracking follows a specific point (a small group of pixels) around the frame, while planar tracking uses planar surfaces present in the video to infer motion. After Effects can also use the point tracking data to extract the motion of the camera that recorded the footage.
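
One way to see how tracked points turn into position, scale, and rotation values is to compare two track points across two frames. The sketch below is a simplified illustration, not After Effects’ own implementation; the function name `two_point_transform` and the sample coordinates are hypothetical.

```python
import math

def two_point_transform(a0, b0, a1, b1):
    """Derive translation, scale, and rotation from two tracked points.

    a0, b0 are the points in the reference frame; a1, b1 are the same
    features in a later frame. Angles are in degrees, measured in image
    coordinates (x to the right, y downward).
    """
    v0 = (b0[0] - a0[0], b0[1] - a0[1])
    v1 = (b1[0] - a1[0], b1[1] - a1[1])
    len0 = math.hypot(v0[0], v0[1])
    len1 = math.hypot(v1[0], v1[1])
    if len0 == 0:
        raise ValueError("reference track points must not coincide")

    translation = (a1[0] - a0[0], a1[1] - a0[1])
    scale = len1 / len0
    angle = math.degrees(math.atan2(v1[1], v1[0]) - math.atan2(v0[1], v0[0]))
    rotation = (angle + 180.0) % 360.0 - 180.0  # wrap into [-180, 180)
    return translation, scale, rotation

# Hypothetical track points: the feature pair moves, doubles in size,
# and turns by a quarter of a circle between the two frames.
translation, scale, rotation = two_point_transform(
    (100, 100), (200, 100),   # frame 1
    (110, 120), (110, 320),   # frame 2
)
print(translation, scale, rotation)
```

The first point supplies the position change, while the line between the two points supplies scale (its change in length) and rotation (its change in angle). A single track point can only ever give position.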

Camera Tracking Techniques

Filmmakers can use tracking to connect objects to camera movements, which is a great tool for creating stunning composite pictures.

In fact, most VFX artists use tracking, and the stabilization it produces, on a daily basis, so it is important to become familiar with the method. Of course, this is just the tip of the iceberg; there are more involved procedures such as 3D and planar tracking.

Consider a shot in which a flare has to be attached to a stick held by an actor. Without tracking, it would be difficult to ensure that the flare moves realistically in time with the actor moving the stick; you would need to approximate its position frame by frame, and the result would look quite shaky, since human eyes are hyper-critical of every wobble or misalignment on film.

Historically, this effect would have been achieved by refilming images, or what were termed “plates,” frame by frame under a film rostrum camera. When tracking was still done manually on celluloid, someone had to transfer a picture of the flare onto the stick, frame by frame, underneath a rostrum camera.

Brief History of Camera Tracking

In today’s digital age, we let the computer do the grunt work. The tracking software scans each succeeding frame for the same object, or a cluster of pixels that resembles it, before attaching the flare.
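
The search described above can be sketched as a brute-force patch match: take a small cluster of pixels from one frame and slide it over the next frame, keeping the position where the sum of squared differences (SSD) is lowest. This is a minimal sketch that assumes grayscale frames stored as nested lists; the function names and the tiny sample frames are hypothetical, and production trackers use far faster and more robust methods.

```python
def ssd(frame, template, top, left):
    """Sum of squared differences between the template and a frame region."""
    total = 0
    for r, row in enumerate(template):
        for c, value in enumerate(row):
            diff = frame[top + r][left + c] - value
            total += diff * diff
    return total

def find_patch(frame, template):
    """Return (row, col, score) of the best-matching template position."""
    t_height, t_width = len(template), len(template[0])
    f_height, f_width = len(frame), len(frame[0])
    best = None
    for top in range(f_height - t_height + 1):
        for left in range(f_width - t_width + 1):
            score = ssd(frame, template, top, left)
            if best is None or score < best[0]:
                best = (score, top, left)
    return best[1], best[2], best[0]

# Hypothetical 4x5 grayscale frames: a bright 2x2 feature moves
# one pixel down and one pixel to the right between frames.
frame_a = [
    [0, 0, 0, 0, 0],
    [0, 9, 7, 0, 0],
    [0, 8, 9, 0, 0],
    [0, 0, 0, 0, 0],
]
frame_b = [
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 9, 7, 0],
    [0, 0, 8, 9, 0],
]

# The tracked cluster of pixels, cut out of frame_a at row 1, column 1.
template = [row[1:3] for row in frame_a[1:3]]

row, col, score = find_patch(frame_b, template)
offset = (row - 1, col - 1)  # how far the feature moved since frame_a
print("found at", (row, col), "offset", offset, "score", score)
```

The resulting offset is what would be applied to the attached element, such as the flare, so that it follows the tracked feature from frame to frame.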

In the 1992 film ‘Death Becomes Her’ (directed by Robert Zemeckis), ILM (Industrial Light and Magic, the company founded to create the visual effects for Star Wars) tried tedious manual tracking for the first time. One actress’s head was removed from the footage using sophisticated digital editing, and a talking head was added to another actress’s body. The head had to be fixed in position. ILM would go on to improve on this by developing a “3D” tracking technology that enabled them to calculate depth in order to track and position computer-generated dinosaurs in three dimensions rather than just two.

Progressing with Time

In the 1990s, it was obvious that people’s tastes were shifting towards more spectacular and fantastical themes, and this created a demand for hardware that would enable such magic. In 1992, a firm by the name of Discreet created a system called Flame that included an integrated tracker. Flame’s first work in visual effects was for the Super Mario Bros. movie, directed by Annabel Jankel and Rocky Morton.

As moviegoers sought more complex VFX, more 3D camera and object matchmoving systems emerged. With the use of clever software, 3D computer-generated models could appear to be placed in moving camera sequences and perform intricate movements as if they were actually there. The software could scan film footage, determine the 3D space it originally represented, and then offer precise data. Software such as 3D Equalizer, and subsequently Boujou, made it possible to incorporate VFX into filmed footage in this way. The technique was used in blockbuster movies like The Matrix, Lord of the Rings, Harry Potter, and other similar works.

Here are some of the advanced camera tracking techniques used in VFX:

- Matchmoving: This technique involves tracking the movement of the camera during the live-action shoot, then using that information to create a virtual camera that matches the movement of the real camera. The virtual camera is then used to render the computer-generated elements in the same perspective and position as the live-action footage.

- 3D Tracking: This technique involves tracking the movement of objects in the scene, in addition to the camera movement. It creates a 3D environment of the scene, allowing VFX artists to accurately place computer-generated objects.

- Photogrammetry: This technique involves taking multiple photographs of an object or environment from different angles, then using specialized software to generate a 3D model of it. This technique is often used to create realistic digital versions of real-world objects.

- Lidar Scanning: This technique involves using a laser scanner to capture precise measurements of an environment. This data is used to create a 3D model of the environment, which can be used in VFX to accurately place and render computer-generated elements.

- Motion Capture: This technique involves capturing the movement of actors or objects in the scene using specialized sensors. This data is then used to animate digital characters or objects, creating realistic movement and interactions with the live-action footage.

Overall, these advanced camera tracking techniques are essential tools for VFX artists, allowing them to create convincing visual effects that blend seamlessly with live-action footage.
